Exploiting Moments of Vulnerability By Design

The revelations about Meta (formerly Facebook) allegedly serving beauty-related ads to teenagers immediately after they deleted a selfie have once again sparked outrage across media and civil society. As reported by Futurism, the recent book Careless People by Sarah Wynn-Williams suggests that Meta’s ad systems were designed to detect such behaviors (deleting a photo being a signal of possible insecurity) and to trigger ads specifically crafted to exploit that state of mind.

As disturbing as this sounds (and is), let’s be honest: it is not surprising.

The Engineering of Influence

This isn’t a case of “bad actors” exploiting a loophole in the system. This is the system.

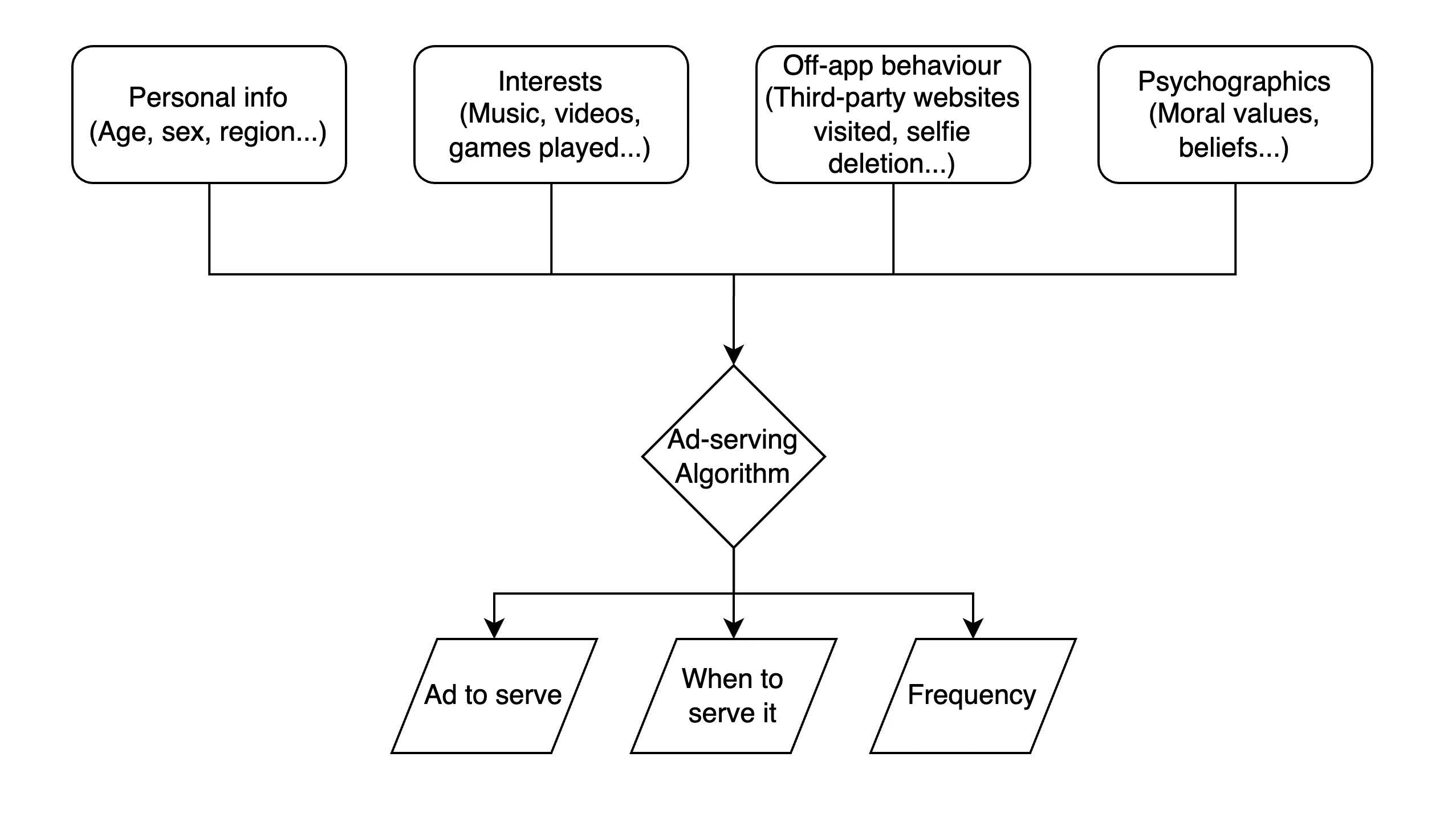

What the book hints at is simply a brutally effective application of behavioral prediction and optimization at scale. It’s an engineering solution to an economic problem: when is an ad most likely to convert? The answer, according to the data, is when users are emotionally vulnerable. And deleting a selfie? That’s a near-perfect proxy for such vulnerability, especially among young users.

We can dress it up in terms like “moment marketing” or “contextual targeting,” but at its core, it’s the industrialization of human psychology for profit.

None of Us Are Immune

It would be a mistake to frame this solely as a “teenager problem.” While teens are particularly susceptible due to their developmental stage, the truth is that none of us are immune to these mechanisms.

These systems are designed to exploit the universal human conditions of doubt, insecurity, and impulse. Whether it’s a fitness app subtly nudging you toward supplements after you log a bad run, or a job search site showing you “confidence-boosting” courses after a rejection email, the pattern is the same.

And it’s all done by design.

The Inevitable Logic of Optimization

This is not a scandal of ethics gone astray inside a rogue team at Meta. It’s the logical outcome of optimization-driven systems where success is measured purely in clicks, conversions, and revenue. In such a system, the “right” thing to do from an engineering perspective is to maximize the ad’s effectiveness, regardless of the user’s well-being.

The problem is not the implementation, but the premise itself.

Beyond Outrage: Rethinking the System—and Our Role in It

The outrage, while justified, often focuses narrowly on the specific company or campaign, missing the forest for the trees. The real issue is systemic. As long as attention and behavior are the product, such exploitative dynamics are not anomalies—they are standard operating procedure.

If we want to change this, it won’t be enough to demand tweaks to algorithms. We need to rethink the very business models and incentives that drive these platforms.

Privacy-preserving technologies such as homomorphic encryption, which we use at Dhiria, offer a promising direction to mitigate some of these issues. By enabling platforms to compute on data while it stays encrypted, without ever accessing the user’s raw information, these technologies can help rebalance the power dynamics between service providers and users, putting more control in the hands of individuals.
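To make "processing data without accessing it" concrete, here is a minimal sketch of the idea using a toy version of the textbook Paillier scheme, which is additively homomorphic. It is an illustration only, not a description of what Dhiria or any platform actually runs: the function names are hypothetical, the primes are far too small to be secure, and a real deployment would rely on a vetted library.

```python
import math
import secrets

def keygen(p, q):
    """Build a toy Paillier key pair from two primes (tiny here, insecure)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # valid because we fix the generator g = n + 1
    return n, (lam, mu)   # n is the public key, (lam, mu) the private key

def encrypt(n, m):
    """Encrypt an integer 0 <= m < n under the public key n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random blinding factor in [1, n-1]
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2

def decrypt(n, priv, c):
    """Recover the plaintext; only the private-key holder can do this."""
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n

def add_encrypted(n, c1, c2):
    """What the platform does: add two values it cannot read."""
    return (c1 * c2) % (n * n)

# The user encrypts locally; the platform only ever sees ciphertexts.
n, priv = keygen(1009, 1013)
c_total = add_encrypted(n, encrypt(n, 3), encrypt(n, 4))
print(decrypt(n, priv, c_total))  # 7
```

The platform computes a sum it cannot read, and only the holder of the private key sees the result. Note what the sketch does not hide: the platform still knows that a computation happened, when, and for whom.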

But let’s be clear: these solutions are not a silver bullet. Skilled engineers and product designers can, and will, still find ways to infer, predict, and influence behaviors even within technically privacy-preserving architectures. Data may be encrypted, but patterns of interaction, metadata, and subtle signals can still be exploited, possibly by leveraging the users' own devices.

Therefore, users need to move beyond a naive trust in either regulation or technology. Awareness, literacy, and conscious decision-making become crucial. Every platform comes with trade-offs, and as users, we need to understand them—not just in terms of features or convenience, but in how they shape our behaviors and vulnerabilities. In the end, the system works exactly as designed. The question is: do we, as a society and as individuals, still want to participate in it as-is?
