The European Union has decisively entered the era of artificial intelligence regulation. With the AI Act, the world’s first comprehensive and binding legal framework for artificial intelligence, Brussels has once again confirmed its role as a global trendsetter in regulation. Just as the GDPR did before it, the AI Act is already influencing lawmakers well beyond Europe’s borders.

Across the Atlantic, the United States finds itself in a very different position. Despite being home to the world’s most powerful artificial intelligence companies and a key hub of innovation in the sector, the U.S. does not have a general federal law governing AI. Instead, the American approach appears fragmented: a combination of executive orders, sector-specific rules, and state-level legislation. However, with the European AI Act coming into force, signs are emerging that the United States, too, is absorbing elements of the European model, while still seeking to maintain its characteristic “light-touch” approach to regulation.

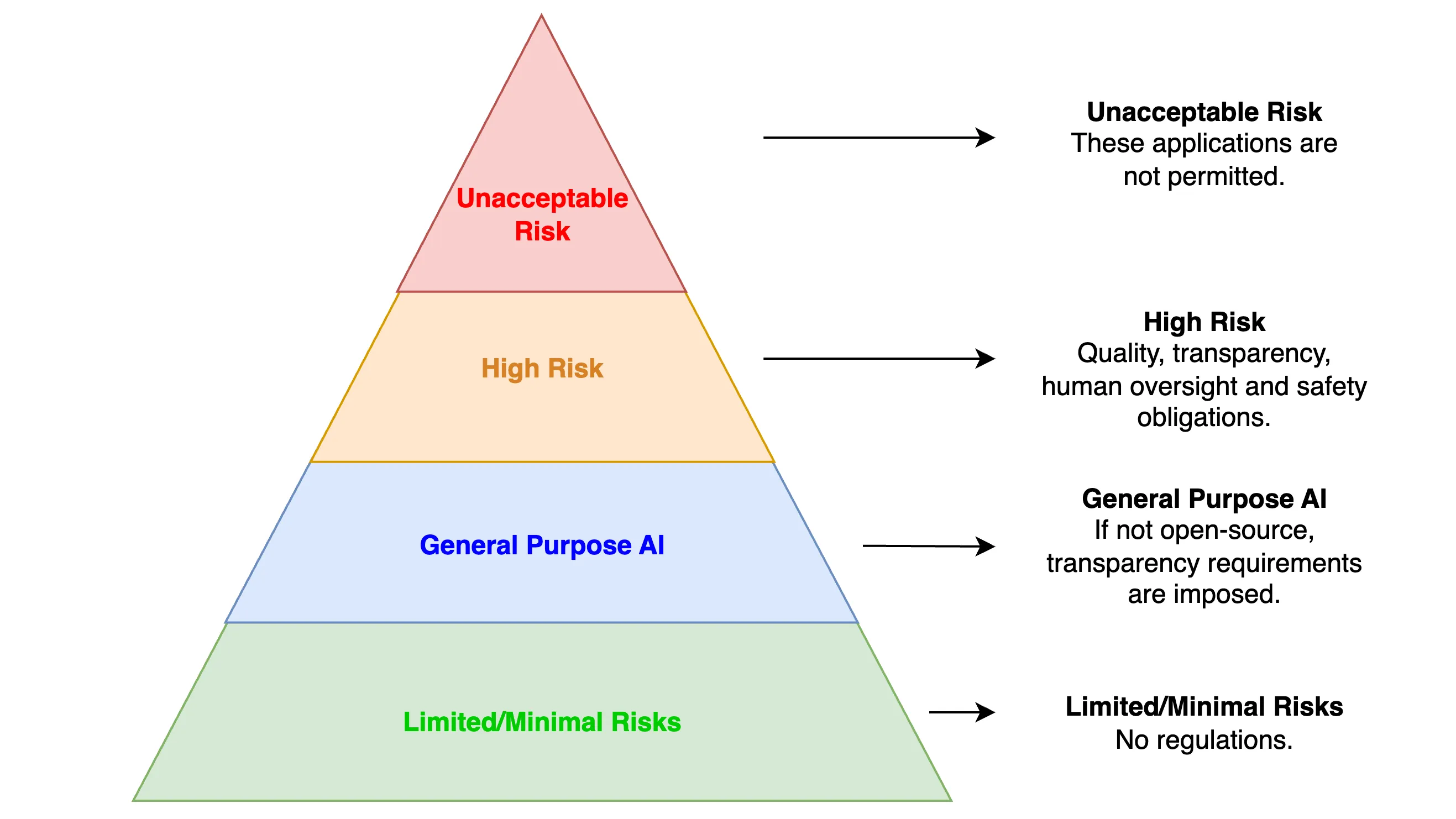

The EU’s AI Act, which entered into force in August 2024, establishes a risk-based framework for governing artificial intelligence. Systems are classified by level of risk. “Unacceptable risk” systems (such as government social scoring) are banned outright. “High-risk” systems (such as AI in healthcare or law enforcement) must comply with strict requirements on transparency, human oversight, and safety testing. “General-purpose AI,” such as Large Language Models (LLMs), must meet transparency obligations if not open-source. “Limited-risk” and “minimal-risk” applications are subject to lighter obligations.
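As a rough illustration, the tiers described above can be written down as a small lookup table. This is only a sketch of the summary given in this article, not of the legal text: the names `RiskTier`, `OBLIGATIONS`, `EXAMPLE_SYSTEMS`, and `obligations_for` are hypothetical, and the example systems are the ones mentioned in the paragraph.

```python
from enum import Enum


class RiskTier(Enum):
    """Risk tiers as summarized in this article (not the Act's legal wording)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    GENERAL_PURPOSE = "general-purpose"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations per tier, paraphrasing the paragraph above (illustrative only).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["banned"],
    RiskTier.HIGH: ["transparency", "human oversight", "safety testing"],
    RiskTier.GENERAL_PURPOSE: ["transparency (if not open-source)"],
    RiskTier.LIMITED: ["lighter obligations"],
    RiskTier.MINIMAL: ["lighter obligations"],
}

# Example systems taken from the article; the mapping is an assumption
# made for illustration, not a legal classification.
EXAMPLE_SYSTEMS = {
    "government social scoring": RiskTier.UNACCEPTABLE,
    "AI in healthcare": RiskTier.HIGH,
    "AI in law enforcement": RiskTier.HIGH,
    "large language model": RiskTier.GENERAL_PURPOSE,
}


def obligations_for(system: str) -> list[str]:
    """Look up the obligations for one of the example systems."""
    tier = EXAMPLE_SYSTEMS[system]
    return OBLIGATIONS[tier]


if __name__ == "__main__":
    for name, tier in EXAMPLE_SYSTEMS.items():
        print(f"{name}: {tier.value} -> {', '.join(obligations_for(name))}")
```

In practice, deciding which tier a real system falls into is a legal assessment, so a table like this can only record the outcome of that assessment, not perform it.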

Implementation will be gradual, with dedicated institutions such as the EU AI Office and the AI Board acquiring powers over the coming years. Penalties for non-compliance can reach 7% of global annual turnover, echoing the strict enforcement model of the GDPR.
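To make the scale of the 7% ceiling concrete, here is a minimal arithmetic sketch. It assumes the Act's top penalty tier for prohibited practices, which sets the maximum at EUR 35 million or 7% of worldwide annual turnover, whichever is higher; the fixed amount comes from the Act itself rather than from this article, and the function name `max_fine_eur` and the turnover figures are hypothetical.

```python
# Assumed ceiling for the most serious infringements (prohibited practices):
# EUR 35 million or 7% of worldwide annual turnover, whichever is higher.
FIXED_CAP_EUR = 35_000_000
TURNOVER_PERCENT = 7


def max_fine_eur(global_annual_turnover_eur: int) -> int:
    """Return the upper bound of the fine for a given annual turnover.

    Integer arithmetic avoids floating-point rounding on large amounts.
    """
    turnover_based = global_annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


if __name__ == "__main__":
    # Hypothetical companies, for illustration only.
    print(max_fine_eur(2_000_000_000))  # 7% of EUR 2 billion exceeds the fixed amount
    print(max_fine_eur(100_000_000))    # 7% is EUR 7 million, so the fixed amount applies
```

This is an upper bound, not the fine a regulator would actually impose; lower ceilings apply to less serious infringements.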

In short, the EU’s regulatory model is comprehensive, centralized, and precautionary, designed to safeguard fundamental rights, ensure transparency, and manage risks proactively.

By contrast, the United States has preferred a “light-touch” approach to AI, one oriented first and foremost toward innovation. So far, the federal government has refrained from adopting broad legislation, limiting itself to issuing executive orders and agency guidelines to steer development.

President Biden’s Executive Order 14110 (signed in October 2023) emphasized the importance of responsible AI innovation, tasking entities such as the National Institute of Standards and Technology (NIST) and the Department of Homeland Security (DHS) with creating standards and risk management frameworks. The difference from the EU’s AI Act is significant: whereas the AI Act lays down a comprehensive framework of permitted and prohibited uses, obligations, and sanctions, EO 14110 merely initiated the process of defining guidelines and best practices.

This position shifted under President Trump’s administration in 2025 with Executive Order 14179, which set the AI Action Plan in motion. The order rolled back Biden-era AI policies and focused on deregulation and competitiveness, signaling Washington’s intent to keep U.S. AI development agile and globally dominant:

> This order revokes certain existing AI policies and directives that act as barriers to American AI innovation, clearing a path for the United States to act decisively to retain global leadership in artificial intelligence.

In summary, the U.S. philosophy remains centered on fostering innovation above all and allowing the market to evolve, introducing regulation only afterward to address specific harms.

For instance, several bills with targeted objectives have been introduced in Congress, and some have become law. The Generative AI Copyright Disclosure Act, a proposal, would require companies developing generative AI models to provide a detailed list of copyrighted works used in their training sets (without limiting their use). The TAKE IT DOWN Act, signed into law in 2025, addresses the problem of deepfakes and revenge porn, seeking to give citizens tools to deal with such situations. Finally, the proposed CREATE AI Act aims to democratize access to AI in order to encourage research and development for the benefit of all U.S. citizens.

Taken together, U.S. legislation seems to cover a broader range of AI-related issues than the EU AI Act, which is unified but narrower in scope. This breadth comes at a cost, however: each bill uses its own language, and the areas of application are neither consistent nor clearly separated, which invites confusion and contradictions.

In the absence of a comprehensive federal law, U.S. states have filled the regulatory gap, and their initiatives increasingly resemble aspects of the European philosophy based on risk and rights. Notably, all 50 states introduced AI-related legislation in 2025.

For example, Tennessee’s ELVIS Act, in force since July 2024, protects musicians and artists from AI-generated imitations, penalizing the misuse of voice and likeness.

Similarly, Utah’s AI Policy Act requires companies to inform their customers when they are interacting with a generative AI system.

In May 2025, Montana’s HB 178 regulated certain uses of AI classified as “Unacceptable” and “High Risk,” paralleling the risk categories of the EU’s AI Act.

Finally, several states are enacting laws in 2025 concerning the use of AI in healthcare.

As more and more states pass AI-related laws, the fragmented nature of U.S. governance becomes increasingly evident. What many of these initiatives share, however, is a focus on risk categories, transparency, and consumer protection, all elements strongly emphasized in the EU’s AI Act.

Although it is unlikely that the United States will adopt a centralized Brussels-style framework in the near future, the EU’s influence is undeniable. American regulators and lawmakers are watching closely, and state-level actions are often justified as a way to “keep pace” with the global standards set by Europe.