How AI Learned to Harness Chaos

If you work with data, you have probably learned to see "noise" as your number one enemy. It is that random component, that imperfection that dirties datasets, hides patterns, and leads our models astray. The grainy image, the distorted audio signal, the imprecise sensor: for years, the main goal has been to filter, clean, and annihilate noise to achieve a pure signal.

But what if we told you that, in one of the most fascinating paradigm shifts in modern artificial intelligence, noise is no longer just the villain of the story? And what if, when controlled and used wisely, it could become one of the most powerful tools at our disposal?

Welcome to the noise paradox: from problem to solution.

The Dark Side of Noise: Anatomy of a Historic Enemy

In the context of Artificial Intelligence (AI), noise is any variation in the data that does not represent the true underlying pattern we are trying to model. Its impact is devastating for several reasons:

Masking Information: In its simplest form, an excessive level of noise can literally hide the useful information (the signal) present in the data. It's like trying to hear a whisper during a rock concert. If the signal-to-noise ratio is too low, the model has nothing significant to learn from.

Overfitting and Spurious Correlations: This is the most insidious danger. A model in the presence of noise, especially a very complex one, not only learns the signal but also learns the noise itself, treating it as if it were a fundamental feature. This leads to the creation of spurious correlations: the model associates the correct output with random occurrences present only in the training set. For example, it might learn that medical images with a particular light artifact (noise) belong to healthy patients, simply because that coincidence occurred by chance in the training dataset.

Model Instability: Models trained on noisy data can become extremely fragile. A minimal variation in the input, perhaps due to slightly different noise, can cause a drastic and unpredictable change in the output.

Over time, we have developed an arsenal of strategies to combat noise, which can be seen as an evolution of thought:

Phase 1: Pre-processing (Cleaning Upstream): The oldest approach, inherited from signal processing. The idea is to clean the data before the model sees it, with the goal of presenting the model with data that is as "pure" as possible.

Phase 2: Robust Statistics (Being Resilient): Rather than trying to clean every single outlier, this approach designs algorithms that are intrinsically "smarter": they know how to recognize and give less weight to extreme or clearly anomalous data, focusing on the general trend of the data.

Phase 3: Regularization (Forcing Simplicity): This has been a fundamental step in modern machine learning. Regularization techniques do not modify the data but add a "penalty" to the model's cost function if it becomes too complex. In practice, the model is forced to describe the data with the simplest possible functions, penalizing the choice of complex functions and thus pushing the model to capture only the main concepts present in the data.

All these strategies, while powerful and still in use today, start from a common assumption: noise is an adversary to be filtered, ignored, or suppressed.

But what happens when we completely change the rules of the game?

Controlled Noise: An Unexpected Ally

The breakthrough comes when we stop seeing noise only as a disturbance to be endured and start thinking of it as an element to be deliberately introduced. Adding a controlled amount of noise during training can have surprisingly positive effects. In training, the process by which a model searches for the best parameters, noise can help avoid getting stuck in "local minima." Imagine having to find the lowest point in a vast mountain range (our "error space") while blindfolded. You might end up in a small basin and think you have arrived, without knowing that a much deeper valley is just a little further away. A bit of noise, a random "push," can help you get out of the basin and continue exploring for a better solution.

One of the most well-known techniques is Data Augmentation. If we are training a model on data, instead of always showing it the same perfect examples, we provide it with slightly modified versions, adding small random perturbations. This forces the model to focus on the essential features and ignore irrelevant variations. The model becomes more robust and generalizes better.

Another fundamental technique in Deep Learning is Dropout. During training, at each step, some units (neurons) of the network are randomly "turned off." This prevents neurons from relying too heavily on their "colleagues" and pushes them to learn more independent and solid features. It's like training a soccer team by forcing players not to always pass the ball to the same person.

The Masterpiece of Controlled Chaos: A Deeper Look at Diffusion Models

While older techniques use noise like a pinch of salt to improve a recipe, Diffusion Probabilistic Models make noise the star ingredient. These models are the engine behind the incredible generative systems that can create stunningly realistic images, music, and more from simple text descriptions.

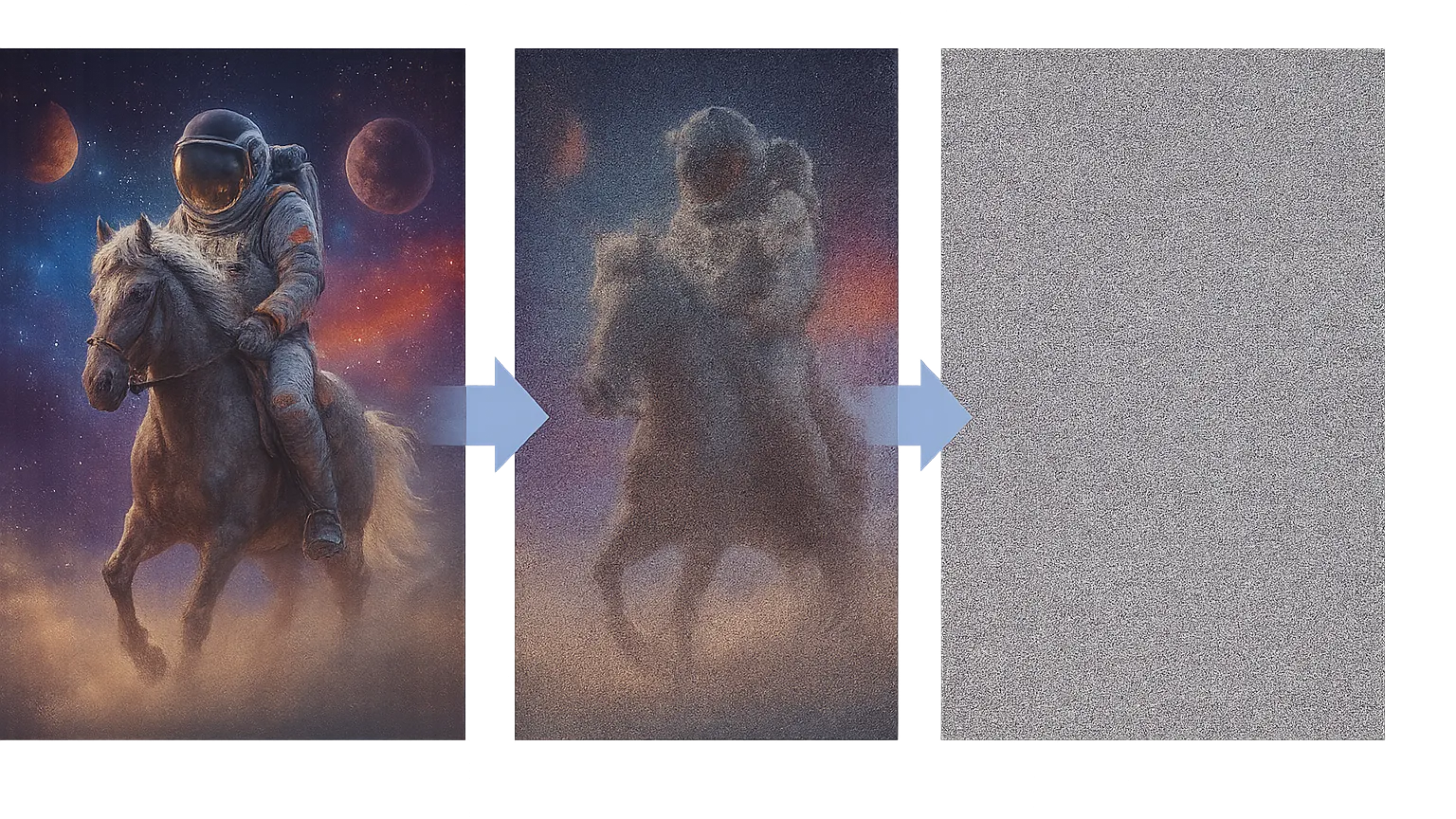

Their method is both clever and seemingly backward. Imagine you want to teach an AI how to create a complex and original painting of "an astronaut riding a horse." Instead of teaching it to paint from a blank canvas, you first teach it how to destroy thousands of existing images, and then, how to perfectly reverse that destruction.

This process unfolds in two key phases: destruction and creation phases.

1. The Forward Process (Destruction): From Image to Static

Think of this phase as slowly dissolving a masterpiece in digital acid. The AI starts with a clear, perfect image—let's say, a training photo of an astronaut riding a horse.

Step 1: It adds a tiny, almost unnoticeable amount of random noise (like a few specks of digital "sand") to the entire picture. The image is now 99% "astronaut riding a horse" and 1% noise.

Step 2: It takes that slightly noisy image and adds another handful of sand. Now it's 98% image, 2% noise. The picture is still visible, but getting fuzzier.

And so on... This process is repeated hundreds, or even thousands, of times for countless images. With each step, more noise is added, and the original image gradually fades.

Eventually, after enough steps, the original photograph is completely gone. What's left is a screen full of pure, random static, like an old TV with no signal. The astronaut and the horse have completely vanished into the noise.

This may seem pointless, but this is the crucial "training" phase. By methodically destroying the image step-by-step, the AI learns exactly how the structure, shapes, and colors of an "astronaut riding a horse" dissolve into chaos. It memorizes the entire process.

2. The Reverse Process (Creation): From Static to Masterpiece

Here is where the real magic happens. The AI, now an expert in the art of destruction, is tasked with doing the exact opposite. This is like a sculptor who can create a statue not by adding clay, but by starting with a solid block of marble and perfectly chipping away everything that isn't the statue.

The Starting Point: The process begins with a completely random canvas of noise—that same chaotic static from the end of the forward process. It contains no image, just random pixels.

The First Step of Denoising: The AI looks at the noisy canvas and asks itself, "Knowing exactly how an image of an 'astronaut riding a horse' dissolves, how can I adjust this static to perform the very last step of that destruction in reverse?" It then makes a tiny adjustment, removing the final layer of noise it would have added.

Iteration after Iteration: The process repeats. At each step, the canvas is a little less noisy. Vague shapes begin to appear from the randomness. The AI, constantly guided by the "astronaut" and "horse" concepts, refines these shapes. The "snow" of the static starts to condense into the outline of a helmet, then the shape of a horse's head. It knows what a horse's leg should look like next to an astronaut's boot because it learned those relationships during the destruction phase.

With each step of "denoising," the image becomes clearer and more defined. The AI isn't guessing; it's meticulously reversing a process it has mastered. After hundreds of these small refinement steps, the chaotic static has been transformed into a clear, coherent, and often breathtaking image that matches your description.

In essence, the model becomes so good at recognizing and removing noise that it can start with pure chaos and "remove" the noise in a highly controlled way to create something entirely new. It has learned the very essence of what an image is, allowing it to sculpt reality from randomness itself.

Conclusion: Embracing Imperfection

Noise is not just an obstacle. It is the starting point and the means through which a model learns the fundamental structure of data.

From an element that corrupts data, noise has transformed into a tool for creating more robust models, an aid for optimization, and, finally, the beating heart of some of the most creative generative models ever conceived. In order to remove noise effectively, the model must have developed an incredibly deep understanding of what constitutes "real" and coherent data.

Modern AI is not just learning to see through chaos, but it is learning to sculpt reality from chaos itself. And this, perhaps, makes it a little more like us.