One of the interesting challenges in computer science is translating concepts that are intuitive to humans into models a computer can understand. The great strength of Deep Learning lies precisely in the ability of neural networks to autonomously learn what matters in an image in order to assign it a label (e.g., dog/cat).

If we stop and think about it, it’s actually quite hard to specify exactly what distinguishes a dog from a cat, and it’s easy to find counterexamples to many of the features that might seem most intuitive (muzzle shape, pointed ears, size).

This translation challenge can be extended to countless concepts we use every day, but here we’ll focus on one in particular: privacy.

Teaching an Algorithm About Privacy

Privacy is a multifaceted concept, and each aspect certainly deserves its own in-depth discussion. In this article, we’ll focus on privacy in the context of AI and Machine Learning.

In particular, how can we teach an ML algorithm to be privacy-aware? And what does it actually mean for AI to “respect privacy”?

Let’s start with the second question: It has been known for several years that large datasets, even when anonymized, can contain enough information to re-identify individuals. A substantial body of research has shown that it is possible, for instance, to recover the identities of people from sensitive datasets (such as medical records).

Surprisingly, this privacy issue doesn’t apply only to datasets, which are no longer shared publicly (biomedical datasets, for example, require verified researcher access) but also to ML models trained on those datasets.

In fact, it is possible to extract information about the training data from the model itself. This type of technique is known as a Membership Inference Attack.

Differential Privacy

Is it possible to quantify how much information can be extracted from a dataset or a model? And how can we limit this information leakage?

Differential Privacy (DP) is a mathematical framework that provides formal, quantifiable guarantees about the privacy of individuals in a dataset, ensuring that no specific person’s information can be inferred from the data or from a model trained on it.

How it works

In practice, DP introduces a controlled amount of random noise into query responses or during model training.

This noise must ensure that the result of any query or inference is indistinguishable between two cases: one where a specific individual is included in the dataset and one where they are not. This must hold true for every person in the dataset.

Therefore no property exposed by the dataset or the model can be used to identify an individual. The key advantage of this approach is that the level of privacy is measurable and tunable.

In its simplest form, Differential Privacy can be tuned using the parameter ε (epsilon), which measures the level of privacy. Lower values indicate stronger protection (more noise, lower accuracy), while higher values mean weaker privacy in favor of model performance.

Why It Matters

Differential Privacy is now considered the gold standard for data protection because:

It offers stronger guarantees than simple anonymization, because it allows you to measure and control the desired level of privacy. Many anonymization techniques have proven to be ineffective for sufficiently large datasets.

It can be applied to modern machine learning systems, including LLMs, with an additional (time) cost only during the training phase, while inference remains equally efficient.

Companies such as Appleand Google have already introduced DP in their products and services to collect usage statistics or to train models securely. For example, Google’s language models that suggest words on the Android keyboard. The U.S. Census Bureau has also been using DP techniques for several years for the datasets it releases to the public.

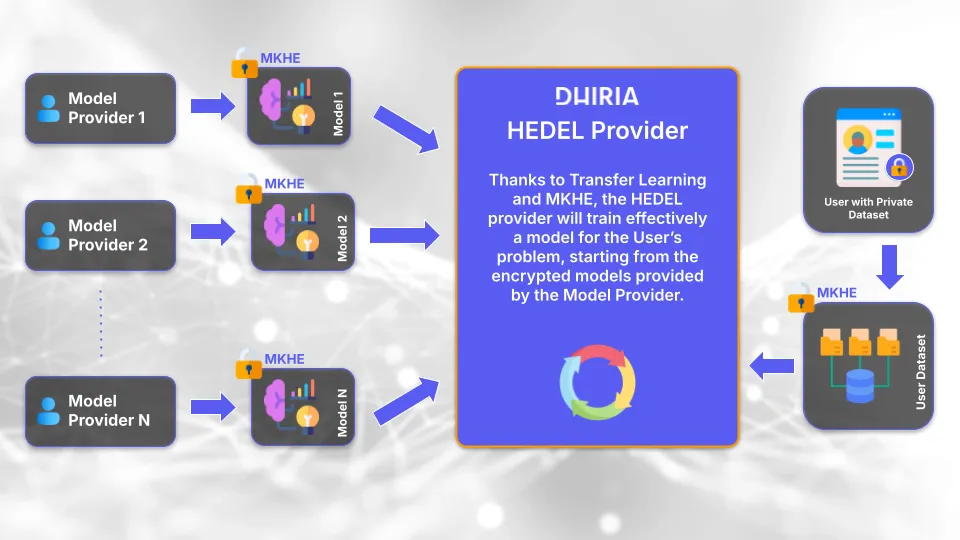

Dhiria’s Vision

At Dhiria, we work to make privacy-preserving AI a tangible reality. With solutions such as encrypted text classification and libraries based on homomorphic encryption, we enable clients to analyze sensitive data without exposing it.

Integrating Differential Privacy into this context means:

Secure analysis of sensitive datasets in domains such as healthcare and finance.

Model personalization with strong security guarantees.

DP does not replace encryption, it complements it: on one side we protect the data itself, and on the other we safeguard the privacy of the individuals those data represent.