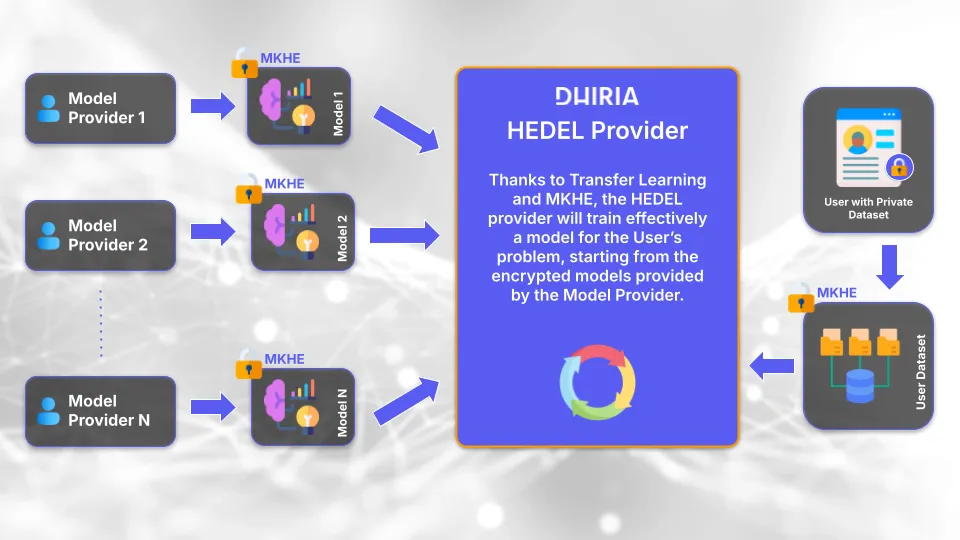

Is it possible to train machine learning models without looking at the training set?

It may sound like a paradox, and yet thanks to a cryptographic technique called homomorphic encryption, at Dhiria we can do exactly that.

Normally, we tend to think of machine learning and cryptography as two very distant worlds: in the first, the goal is to extract as much information as possible from a dataset in order to make predictions; in the second, the objective is to minimize the amount of information exposed by data, often mixing it in ways that make it unrecognizable.

These two goals seem to be in direct conflict with each other, but are they really?

A bit of cryptography first

Let’s refine this rough definition of cryptography. Its purpose is indeed to hide data from prying eyes, but there is an extra piece: this protection is tied to the use of a secret key. Whoever holds the key must be able to access the data; otherwise encrypting something wouldn’t be very useful.

So yes, cryptography does “mix up” the data into something unrecognizable, but only for those who don’t possess the secret key.

Some cryptographic protocols (AES, for instance) encrypt data by scrambling it in such a way that no meaningful operations can be performed on it, or rather, any operation would turn the data into random noise.

These protocols, if combined with other control mechanisms such as checksums, guarantee not just the Confidentiality but also the Integrity of the data (see the CIA triad: https://informationsecurity.wustl.edu/items/confidentiality-integrity-and-availability-the-cia-triad/).

Homomorphic encryption protocols, however, scramble data in such a way that a modification applied to the encrypted data maps back to a predictable modification of the original data, even without knowing the original data itself.
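As a rough way to state this property for addition, the notation below is an assumption introduced only for illustration: $\mathrm{Enc}$ and $\mathrm{Dec}_{sk}$ stand for encryption and for decryption with the secret key $sk$, and $\oplus$ stands for whatever operation the scheme defines on encrypted data to mirror addition.

$$
\mathrm{Dec}_{sk}\big(\mathrm{Enc}(a) \oplus \mathrm{Enc}(b)\big) = a + b
$$

Whoever computes $\oplus$ only ever sees $\mathrm{Enc}(a)$ and $\mathrm{Enc}(b)$, never $a$, $b$, or $sk$.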

This also means that the desirable property of randomness (i.e., altering the encrypted version turns the data into random noise) no longer holds, since the data can be altered in a specific way without destroying its entire structure.

It therefore becomes less straightforward to introduce techniques for checking the integrity of encrypted data, even though solutions have already been proposed.

How does it work in practice?

Homomorphic encryption works with vectors in high-dimensional spaces. Each message we want to send is transformed into one such vector.

The encryption phase simply applies a transformation to the vector, and this same transformation is then applied to every piece of data we want to use for ML.

Between these encrypted vectors, we can now perform two fundamental operations: addition and multiplication.

These two operations alone make it possible to execute many ML models using only encrypted vectors.
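To make this concrete, here is a minimal sketch of a single model "neuron" written with nothing but addition and multiplication. It runs on plain Python floats as a stand-in: in a real homomorphic setting the inputs would be encrypted values and `+` and `*` would be the scheme's own operations. The function names and the choice of squaring as a polynomial activation are assumptions made for this illustration, not a description of any specific library.

```python
def dot(weights, inputs):
    """Weighted sum built only from additions and multiplications."""
    acc = 0.0
    for w, x in zip(weights, inputs):
        acc = acc + w * x
    return acc


def neuron(weights, bias, inputs):
    """Linear step followed by a square activation (hypothetical choice).

    Squaring is used here because it needs only a multiplication,
    unlike activations that rely on comparisons or exponentials.
    """
    z = dot(weights, inputs) + bias
    return z * z


# Plain floats stand in for encrypted values in this sketch.
weights = [0.5, -1.0, 2.0]
bias = 0.25
inputs = [1.0, 2.0, 3.0]

output = neuron(weights, bias, inputs)
print(output)
```

Nothing in `neuron` branches on the data or inspects its value, which is what would allow the same computation to be carried out on encrypted inputs.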

A person without access to the secret key cannot retrieve the underlying data from the vectors. Yet, thanks to the public key, they can still take two vectors and perform operations on them.

Let’s consider a very simple example:

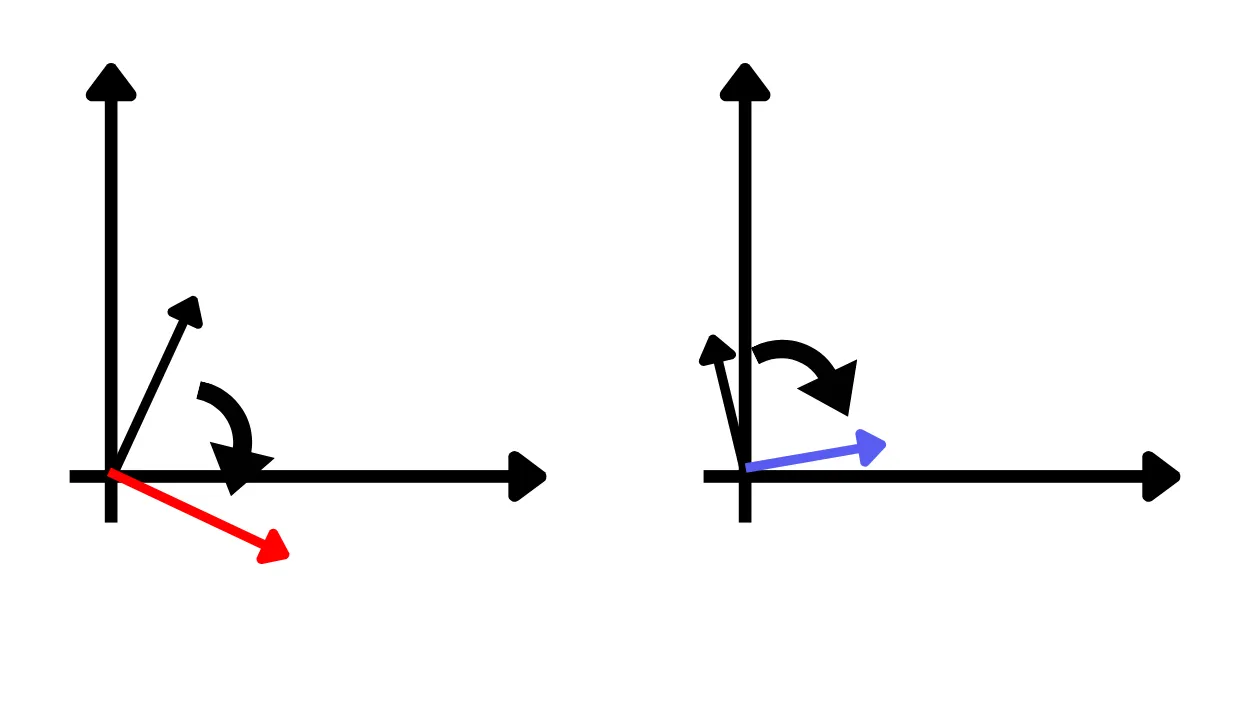

Imagine that the encryption operation consists in simply rotating a vector, and that the secret key is the value of the rotation angle.

Suppose we use a 90° angle as our secret key. The steps are:

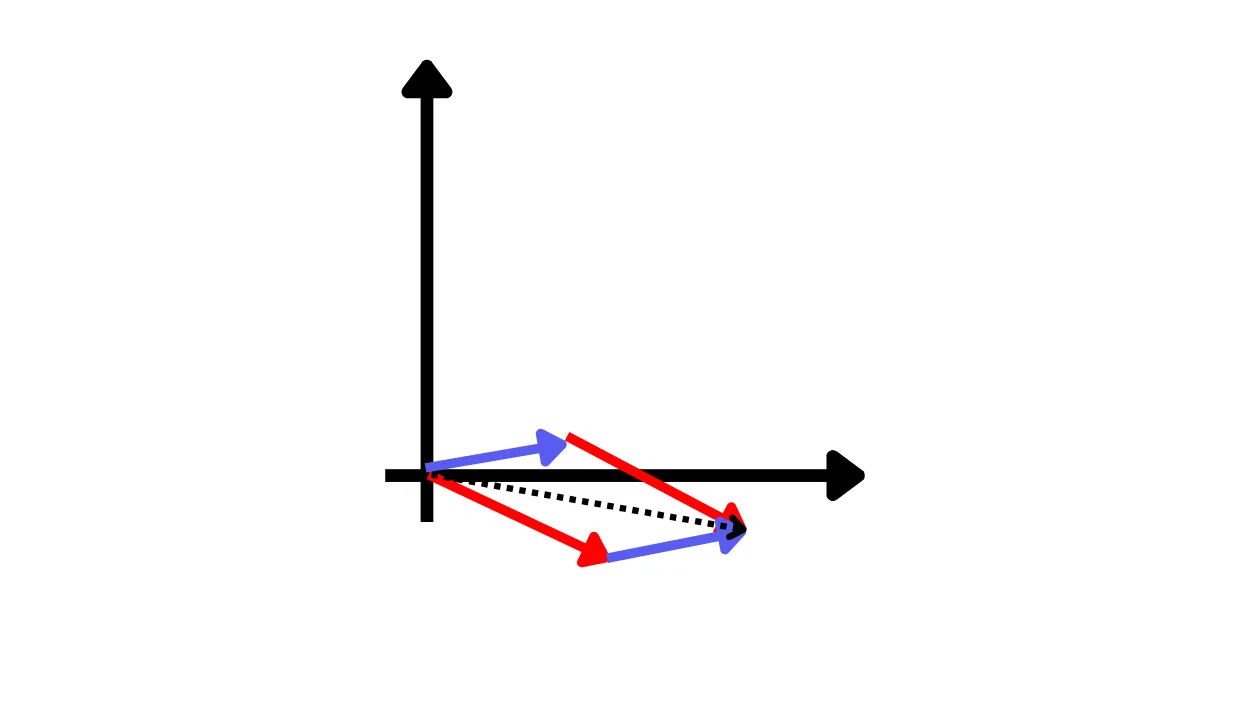

Encrypt two vectors (in black) by rotating them 90°.

Send the two encrypted vectors to another person and ask them to compute the sum.

Receive the result.

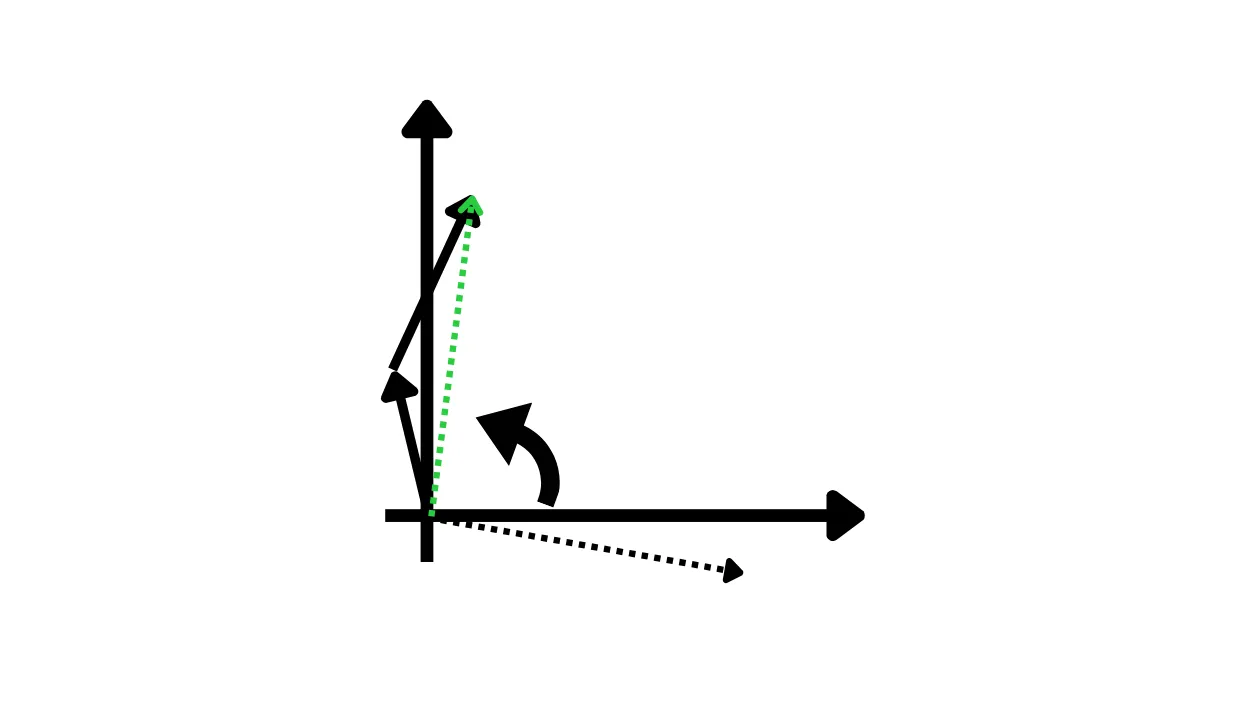

Decrypt the result by rotating it back by 90° in the opposite direction, obtaining the sum (in green).

Notice how the final result corresponds exactly to the vector we would have computed ourselves if we had summed the two original vectors.

At the same time, the person who received the rotated vectors does not know by how much they were rotated, and therefore cannot know what the original vectors were. Yet, they are still able to perform the sum operation correctly.

Of course, this is a heavily simplified model and not secure at all (since the lengths of the vectors and the relative angle between them are exposed), but the core idea is fundamentally the same, only with more complex transformations and additional intermediate steps.
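The rotation example can be reproduced in a few lines of Python. This is only a sketch of the toy scheme described above, not of a real homomorphic encryption scheme; the vectors and the helper names `rotate` and `add` are assumptions chosen for illustration.

```python
import math


def rotate(v, angle_deg):
    """Rotate a 2D vector counter-clockwise by angle_deg degrees."""
    t = math.radians(angle_deg)
    x, y = v
    return (x * math.cos(t) - y * math.sin(t),
            x * math.sin(t) + y * math.cos(t))


def add(u, v):
    """Component-wise sum of two 2D vectors."""
    return (u[0] + v[0], u[1] + v[1])


SECRET_ANGLE = 90  # the toy "secret key": known only to the data owner

# Two example vectors (hypothetical values).
a = (2.0, 1.0)
b = (1.0, 3.0)

# 1. The data owner encrypts both vectors by rotating them.
enc_a = rotate(a, SECRET_ANGLE)
enc_b = rotate(b, SECRET_ANGLE)

# 2. The other person sums the encrypted vectors without knowing the angle.
enc_sum = add(enc_a, enc_b)

# 3. The data owner decrypts by rotating back in the opposite direction.
dec_sum = rotate(enc_sum, -SECRET_ANGLE)

# The decrypted result matches the sum of the original vectors
# (up to floating-point rounding).
expected = add(a, b)
assert all(math.isclose(d, e, abs_tol=1e-9) for d, e in zip(dec_sum, expected))
```

The example works because rotation is a linear transformation: rotating two vectors and then summing them gives the same result as summing them first and rotating afterwards.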

🔐 Guard yourself from the threats of tomorrow

Dhiria builds privacy-preserving machine learning solutions powered by cutting-edge cryptographic techniques like homomorphic encryption. We help organizations securely process sensitive data with future-proof, compliance-ready architectures.

👉 Discover how we can help you protect your data even during processing!

Visit www.dhiria.com or contact us at info@dhiria.com for a personalized demo.